A recent Surfshark study has put Meta AI’s data collection practices in the spotlight: the chatbot collects 32 of 35 possible data types, a capture rate of just over 90%. The finding has reignited debate over AI tool data privacy and SME data security concerns. Extensive Meta AI data collection may enable more advanced functionality, but it has also heightened fears over Meta chatbot privacy risks, particularly for small businesses that rely on AI tools. Enterprise AI data management frameworks now face closer scrutiny as stakeholders demand greater transparency and safeguards against potential breaches without sacrificing innovation. With privacy advocates calling for stricter oversight, the study underscores the need to address these vulnerabilities without stifling technological progress. This tension between efficiency and ethics has made Meta’s practices a pivotal case study in modern digital accountability.

Beyond the metrics, the report’s implications resonate across the digital landscape, prompting a reevaluation of data governance in tech ecosystems. As businesses assess the risks of information aggregation, the focus shifts to limiting exposure through adaptive security protocols and real-time monitoring of AI-driven data flows. While the findings amplify calls for ethical AI frameworks, organizations are prioritizing layered privacy safeguards to reconcile innovation with compliance and to build resilience against evolving threats.

Meta AI Data Collection: A Deep Dive into Privacy Implications

Surfshark’s study identifies Meta AI as the most data-hungry of the AI chatbots it compared, collecting 32 of 35 possible data types. Data aggregation on this scale raises critical questions about how SMEs’ operational data is used, stored, and potentially monetized by large tech firms. The sheer volume of data harvested, from user interactions to behavioral patterns, creates vulnerabilities for businesses that rely on these tools for competitive insights.

A collection rate above 90% (32 of 35 data types, or about 91%) highlights Meta’s dominance in data acquisition but also amplifies SME concerns about data ownership and transparency. Enterprises must now evaluate whether the benefits of AI-driven tools outweigh the risks of exposing sensitive business data to third-party platforms.

AI Tool Data Privacy Concerns for SMEs

SMEs face a paradox when adopting AI tools: while these technologies enhance efficiency, they often demand extensive data sharing. The Meta AI case study illustrates how SMEs might inadvertently expose customer information or operational strategies through opaque data practices. This duality forces businesses to prioritize privacy frameworks alongside innovation.

Data security concerns are compounded by the lack of standardized transparency from AI providers. SMEs must now seek tools with explicit data governance policies to mitigate risks associated with unregulated data collection practices.

Meta Chatbot Privacy Risks: Unpacking the Data Harvesting Practices

The Meta chatbot’s data harvesting mechanisms, as detailed in the study, include capturing contextual user inputs, search histories, and even third-party application interactions. This level of granularity poses unique risks for SMEs using the tool for customer service automation, potentially exposing proprietary business data.

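Critics argue that Meta’s data collection methods exceed operational necessity, which risks non-compliance with regulations such as the GDPR, whose data minimization principle (Article 5(1)(c)) limits personal data to what is necessary for the stated purpose. Companies must now audit AI tool integrations to ensure alignment with data protection standards.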
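One way to begin such an audit is to compare the data types a vendor discloses against the data types a specific use case actually needs, in the spirit of data minimization. The Python sketch below is a minimal illustration under assumptions: the data-type labels and the `audit_integration` helper are hypothetical, and in practice the vendor's list would be taken from its published privacy disclosures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditResult:
    # Disclosed by the vendor but not needed for the use case (minimization gaps).
    excess: frozenset[str]
    # Needed by the use case but absent from the vendor's disclosure
    # (a hint that the disclosure may be incomplete).
    undisclosed: frozenset[str]


def audit_integration(disclosed: set[str], required: set[str]) -> AuditResult:
    """Compare the data types a vendor says it collects with what the use case needs."""
    return AuditResult(
        excess=frozenset(disclosed - required),
        undisclosed=frozenset(required - disclosed),
    )


# Hypothetical example: a customer-service chatbot integration.
vendor_disclosure = {"contact_info", "usage_data", "location", "browsing_history"}
needed_for_support = {"contact_info", "usage_data"}

result = audit_integration(vendor_disclosure, needed_for_support)
print(sorted(result.excess))       # ['browsing_history', 'location']
print(sorted(result.undisclosed))  # []
```

Anything in `excess` is a candidate for a question to the vendor, or for a configuration change that stops that data from flowing in the first place.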

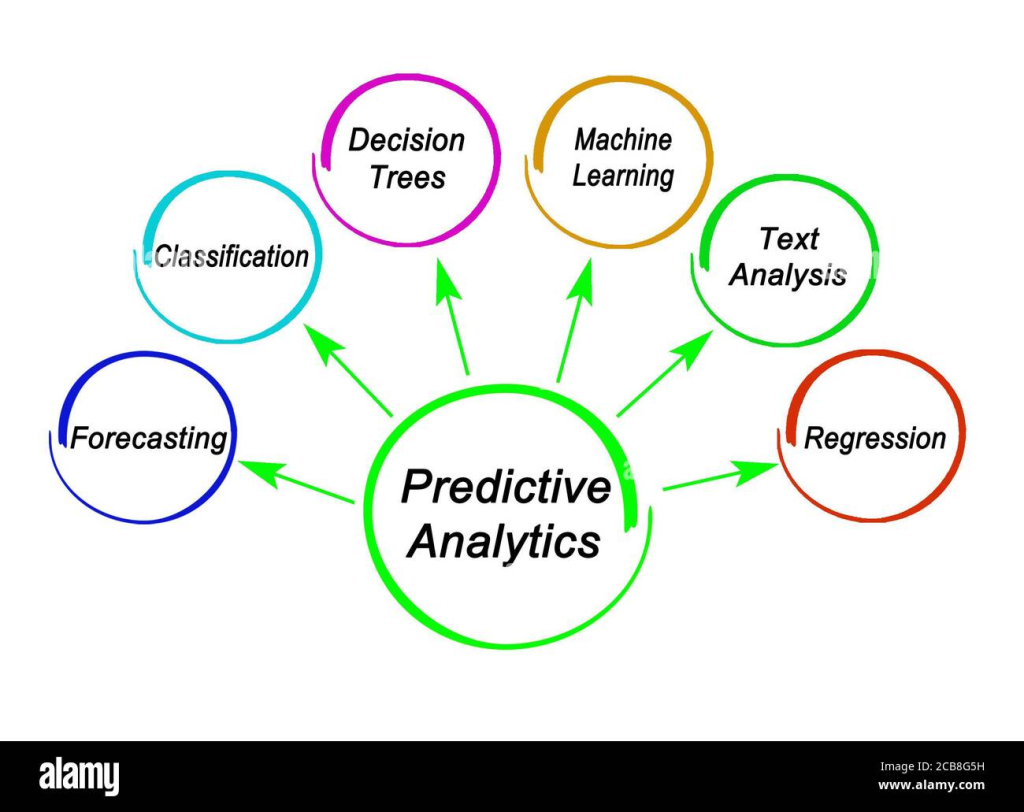

Enterprise AI Data Management Strategies in the Face of Rising Risks

Enterprises are adopting tiered data management systems to compartmentalize sensitive information from third-party AI tools. Strategies include anonymization techniques and restricted data access protocols to mitigate exposure while retaining AI-driven benefits.
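A tiered approach can be sketched as a filter that runs before any record leaves internal systems: each field is assigned a tier that decides whether it is shared as-is, replaced with a token, or withheld entirely. This is a minimal Python sketch under stated assumptions: the field names, tier labels, and `prepare_for_vendor` helper are hypothetical, the email regex catches only common address formats, and the keyed hash (HMAC-SHA-256) is pseudonymization rather than true anonymization, so the GDPR still treats its output as personal data.

```python
import hashlib
import hmac
import re

# Hypothetical field-to-tier mapping; a real system would derive this from a data inventory.
FIELD_TIERS = {
    "ticket_text": "share",           # free text, sent after email redaction
    "product": "share",
    "customer_id": "pseudonymize",    # replaced with a stable keyed token
    "customer_email": "pseudonymize",
    "card_number": "withhold",        # never leaves internal systems
}

# Simple pattern for common email formats; it will miss unusual addresses.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed SHA-256 (HMAC): the same value always yields the same token, and
    without the key an outsider cannot recompute tokens from guessed values."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]


def prepare_for_vendor(record: dict[str, str], key: bytes) -> dict[str, str]:
    """Return a filtered copy of record for sending to a third-party AI tool."""
    outbound = {}
    for field, value in record.items():
        tier = FIELD_TIERS.get(field, "withhold")  # unknown fields default to withheld
        if tier == "share":
            outbound[field] = EMAIL_RE.sub("[EMAIL]", value)
        elif tier == "pseudonymize":
            outbound[field] = pseudonymize(value, key)
        # "withhold" fields are simply not copied
    return outbound


key = b"replace-with-a-secret-from-a-key-store"  # placeholder, not a real secret
ticket = {
    "ticket_text": "Please refund jane.doe@example.com",
    "customer_email": "jane.doe@example.com",
    "card_number": "4111111111111111",
    "internal_notes": "VIP customer",
}
print(prepare_for_vendor(ticket, key))
```

Defaulting unknown fields to `"withhold"` is the design choice that matters most here: a new field added upstream stays out of outbound traffic until someone deliberately classifies it.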

Proactive enterprises are also investing in in-house AI solutions and partnerships with vendors offering transparent data policies. This shift reflects a broader trend toward regaining control over data flows in an era of aggressive third-party data collection.

Regulatory Challenges and Compliance in AI Data Handling

The study has intensified calls for stricter AI data governance frameworks. Policymakers are now scrutinizing how data collection practices align with existing regulations, particularly for SMEs that may lack the resources to navigate compliance complexities.

Legal experts emphasize the need for clear liability clauses in AI tool contracts. SMEs must now prioritize vendors with verifiable compliance certifications to avoid legal risks associated with non-compliant data practices.

Frequently Asked Questions

Why should SMEs be concerned about Meta AI’s data collection practices?

Meta AI collects 32 out of 35 possible data types according to Surfshark’s study, raising SME data security concerns. This extensive collection increases exposure to potential data breaches and unauthorized use of sensitive business information.

What specific data types does Meta AI collect that impact enterprise AI data management?

Meta AI captures 32 critical data categories including behavioral patterns, communication logs, and user metadata. This level of granularity raises compliance challenges for enterprises managing AI tools under strict data governance frameworks.

How do Meta chatbot privacy risks affect small businesses using Meta’s AI tools?

Because the chatbot collects more than 90% of the data types tracked in the study, it increases exposure to privacy violations. Small businesses may inadvertently expose customer data, complicating GDPR/CCPA compliance and eroding client trust.

What safeguards does Meta implement for AI tool data privacy?

Meta states it uses encryption and anonymization techniques, though the Surfshark study highlights discrepancies between stated policies and actual data-handling practices observed in their chatbot ecosystem.

What should enterprises consider when evaluating AI tools’ data management practices?

Prioritize tools with transparent data collection policies, third-party audits, and granular control over data retention, and use independent studies such as Surfshark’s analysis to benchmark them against Meta AI’s approach.
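These criteria can be turned into a simple checklist. The Python sketch below is illustrative only: `VendorProfile`, `unmet_criteria`, the vendor names, their yes/no answers, and the 50% `max_share` cutoff are all hypothetical. Only the 32-of-35 count comes from the study, and it is reused here purely to show how a collection share is computed.

```python
from dataclasses import dataclass


@dataclass
class VendorProfile:
    name: str
    publishes_collection_policy: bool  # transparent data collection policy
    has_third_party_audit: bool        # independent audit or certification
    retention_configurable: bool       # granular control over data retention
    data_types_collected: int
    data_types_possible: int = 35      # size of the data-type list in the Surfshark comparison

    @property
    def collection_share(self) -> float:
        return self.data_types_collected / self.data_types_possible


def unmet_criteria(vendor: VendorProfile, max_share: float = 0.5) -> list[str]:
    """List the checklist items a vendor fails; max_share is an arbitrary illustrative cutoff."""
    gaps = []
    if not vendor.publishes_collection_policy:
        gaps.append("no transparent data collection policy")
    if not vendor.has_third_party_audit:
        gaps.append("no third-party audit")
    if not vendor.retention_configurable:
        gaps.append("no granular retention control")
    if vendor.collection_share > max_share:
        gaps.append(f"collects {vendor.collection_share:.0%} of tracked data types")
    return gaps


# Hypothetical vendors: the yes/no answers are invented for illustration.
heavy = VendorProfile("vendor_a", True, False, True, data_types_collected=32)
light = VendorProfile("vendor_b", True, True, True, data_types_collected=10)
print(unmet_criteria(heavy))  # ['no third-party audit', 'collects 91% of tracked data types']
print(unmet_criteria(light))  # []
```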

| Aspect | Details | Impact |
|---|---|---|
| Data collected | 32 of 35 data types (about 91%) | Highest data intensity among the chatbots analyzed |
| Focus of concern | Small and medium-sized enterprises (SMEs) that rely on AI tools | Increased privacy and liability risks for SMEs |
| Study overview | Conducted by cybersecurity firm Surfshark | Highlights the urgent need for industry transparency |

Summary

Surfshark’s analysis shows that Meta AI captures 32 of 35 possible data types, the broadest scope of any chatbot in the study. This raises critical privacy considerations for SMEs that depend on AI tools and underscores the need for transparent data policies and regulatory scrutiny in the AI industry. The findings highlight Meta AI’s dominance in data aggregation and prompt enterprises to reassess their digital strategies in line with evolving data protection expectations.